Architecture

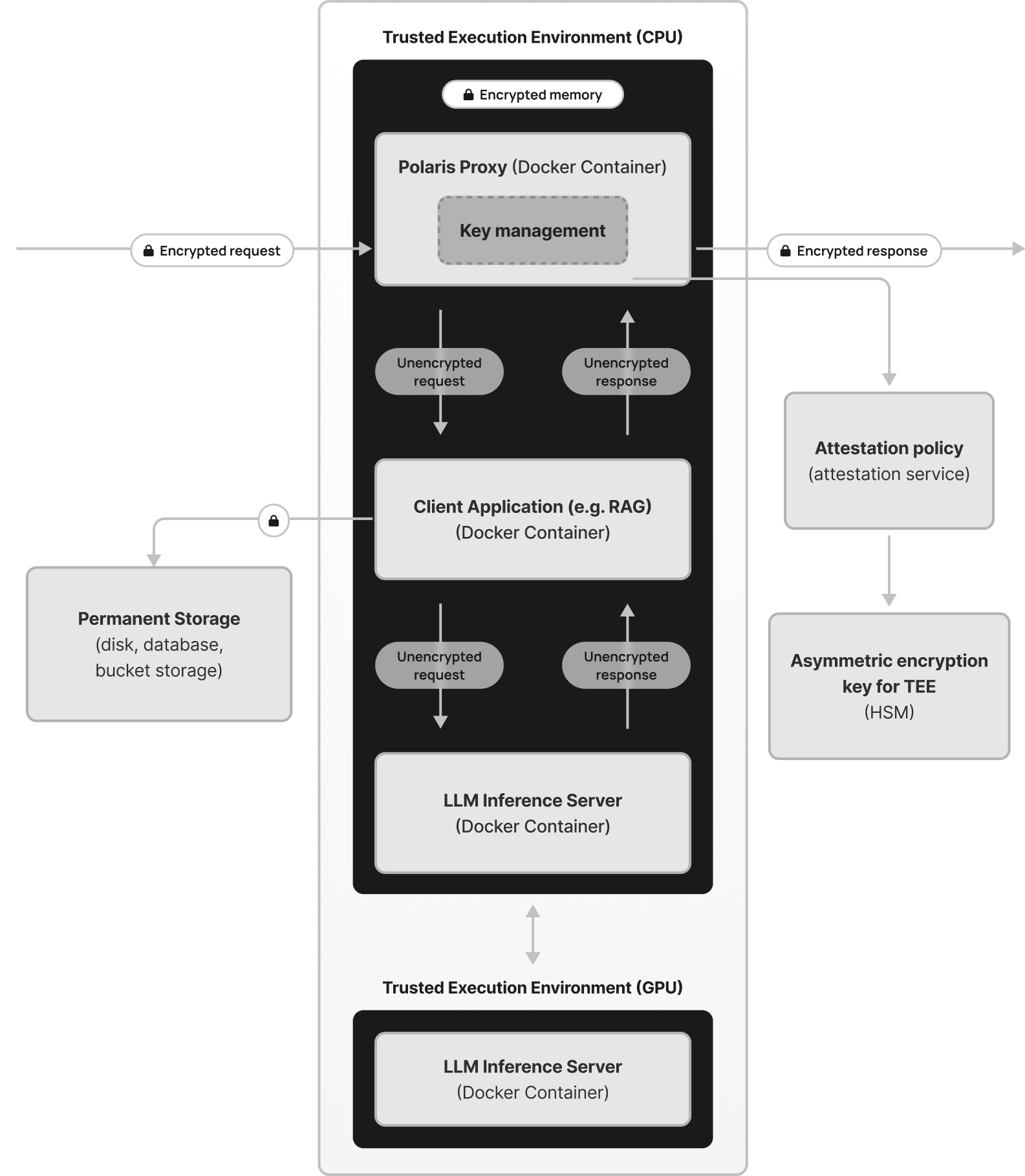

Each Polaris container runs an instance of the Polaris Secure Proxy alongside the client workload container, as shown in the diagram above.

Trusted Execution Environment (TEE)

The Polaris container is designed to run inside a Trusted Execution Environment (TEE) to ensure data is encrypted at all times, including while in use. This is achieved by provisioning Polaris inside a Confidential Virtual Machine (CVM) based on AMD SEV-SNP technology. The exact implementation depends on the cloud service provider being used. For details, please refer to the Cloud Providers documentation.

Confidential Virtual Machines provide full memory encryption with minimal overhead, protecting data in the machine's memory against malicious hypervisors or other VMs running on the same host. The encryption is hardware-based and does not require any changes to the workload running inside the VM.

Polaris Secure Proxy

The Polaris Secure Proxy sits in front of the client workload and acts as an HTTP proxy server. It is implemented in TypeScript using the express-http-proxy library. When configured to do so, the proxy transparently decrypts incoming requests and encrypts outgoing responses, so clients can communicate with the workload over an encrypted channel.

For details on configuring the Polaris Secure Proxy, please refer to the Polaris Secure Proxy documentation.
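The transparent decrypt/encrypt behavior described above can be pictured as a pair of body hooks. The following is a minimal sketch, not the actual Polaris Secure Proxy code: `BodyTransform`, `ProxyDecorators`, and `makeDecorators` are hypothetical names, and the hooks are merely shaped like the `proxyReqBodyDecorator` and `userResDecorator` options of express-http-proxy. The real cryptographic functions are injected, so the sketch makes no assumption about the cipher in use.

```typescript
// Hypothetical type: any function that maps one body buffer to another
// (for example a decrypt or encrypt routine supplied by the crypto layer).
type BodyTransform = (data: Buffer) => Buffer;

// Hooks shaped like the express-http-proxy options of the same names.
interface ProxyDecorators {
  proxyReqBodyDecorator: (bodyContent: Buffer) => Buffer;
  userResDecorator: (proxyRes: unknown, proxyResData: Buffer) => Buffer;
}

// Builds the two hooks. When `enabled` is false, bodies pass through untouched,
// which mirrors the "can be configured" wording of the text above.
function makeDecorators(
  decrypt: BodyTransform,
  encrypt: BodyTransform,
  enabled: boolean,
): ProxyDecorators {
  return {
    // Request path: client -> proxy -> workload (decrypt before forwarding).
    proxyReqBodyDecorator: (bodyContent) =>
      enabled ? decrypt(bodyContent) : bodyContent,
    // Response path: workload -> proxy -> client (encrypt before returning).
    userResDecorator: (_proxyRes, proxyResData) =>
      enabled ? encrypt(proxyResData) : proxyResData,
  };
}
```

In an Express application, the returned object could be passed as the options argument when mounting the proxy in front of the workload URL. The workload itself then only ever sees plaintext requests and produces plaintext responses.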

LLM Inference Server in TEE (CPU)

The LLM inference process within Polaris begins inside the Trusted Execution Environment (TEE), where sensitive data is processed securely before being sent for model execution. The CPU-based inference server running in the TEE handles input preprocessing, which includes operations such as prompt optimization, sensitive data filtering, and request validation. Because the TEE provides a protected runtime, all computations performed within it remain secure, and plaintext data is not accessible to unauthorized parties.

By executing these initial stages inside the TEE, Polaris guarantees that only sanitized and non-sensitive data leaves this secure enclave. This step is crucial for maintaining confidentiality, as it prevents exposure of sensitive inputs during further processing. Once preprocessing is complete, the refined data is forwarded to the GPU-based inference server for model execution.
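As an illustration of the preprocessing stage, the sketch below validates a request and masks two kinds of sensitive values before the prompt would leave the TEE. Everything in it is an assumption made for the example: the `InferenceRequest` shape, the function names, and the two regular expressions are hypothetical and are not taken from Polaris. Real sensitive-data filtering would need far broader coverage.

```typescript
// Hypothetical request shape used only for this sketch.
interface InferenceRequest {
  prompt: string;
  maxTokens: number;
}

// Illustrative patterns only: an email address and a US-style SSN.
const SENSITIVE_PATTERNS: Array<[RegExp, string]> = [
  [/[\w.+-]+@[\w-]+(\.[\w-]+)+/g, "[EMAIL]"],
  [/\b\d{3}-\d{2}-\d{4}\b/g, "[SSN]"],
];

// Request validation: reject obviously malformed input early.
function validateRequest(req: InferenceRequest): void {
  if (req.prompt.trim().length === 0) {
    throw new Error("prompt must not be empty");
  }
  if (!Number.isInteger(req.maxTokens) || req.maxTokens <= 0) {
    throw new Error("maxTokens must be a positive integer");
  }
}

// Sensitive data filtering: replace each match with a placeholder label.
function redactSensitive(text: string): string {
  return SENSITIVE_PATTERNS.reduce(
    (acc, [pattern, label]) => acc.replace(pattern, label),
    text,
  );
}

// Full preprocessing step: validate, trim, then redact.
function preprocessRequest(req: InferenceRequest): InferenceRequest {
  validateRequest(req);
  return { ...req, prompt: redactSensitive(req.prompt.trim()) };
}
```

For example, a prompt such as `Email alice@example.com about 123-45-6789` would be forwarded as `Email [EMAIL] about [SSN]`.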

LLM Inference Server in GPU

The GPU-based LLM inference server is responsible for executing the actual model computations required to generate responses. Unlike the TEE-based server, this component operates outside of the secure enclave, allowing it to leverage the full computational power of the GPU for efficient and high-speed inference. Since only preprocessed, non-sensitive data reaches this stage, security risks are minimized even though the execution occurs outside of the TEE.

Once the model generates an output, the response is sent back to the TEE for post-processing, where any final modifications, encryption, or additional security measures are applied before the response is returned to the client. By separating inference execution between the TEE and the GPU, Polaris combines strong data protection with high-performance model execution, keeping sensitive information secure without sacrificing processing speed.
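The request flow across the trust boundary can be summarized in a few lines. This is a sketch under stated assumptions rather than the Polaris implementation: the `InferenceStages` interface and `handleInference` function are hypothetical, and the three stages are injected so that the example only fixes their order and where each one runs.

```typescript
// Hypothetical stage contract for this sketch.
interface InferenceStages {
  // Runs inside the TEE: validation, filtering, prompt optimization.
  preprocess: (prompt: string) => string;
  // Runs outside the TEE on the GPU server; only sees sanitized input.
  inferOnGpu: (sanitizedPrompt: string) => Promise<string>;
  // Runs inside the TEE again: final modifications and response encryption.
  postprocess: (rawOutput: string) => string;
}

// Orchestrates one request: TEE -> GPU -> TEE.
async function handleInference(
  prompt: string,
  stages: InferenceStages,
): Promise<string> {
  const sanitized = stages.preprocess(prompt);    // plaintext never leaves the TEE
  const raw = await stages.inferOnGpu(sanitized); // the GPU receives only sanitized data
  return stages.postprocess(raw);                 // the result is finalized inside the TEE
}
```

Keeping the GPU call behind a function that accepts only the sanitized string makes the boundary explicit in the type signature: there is no path by which the original prompt can reach `inferOnGpu`.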

Permanent Encryption Key

The proxy also manages access to the private keys used to decrypt data inside the TEE. The proxy provisions an ephemeral 4096-bit RSA public/private key pair, which is stored in memory. The private key is only accessible within the TEE and is never exposed externally.
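A minimal sketch of provisioning such an ephemeral key pair with Node's built-in `crypto` module follows. The function names are hypothetical, and the choice of RSA-OAEP with SHA-256 is an assumption for this example, since the padding scheme used by Polaris is not specified here. Note that RSA encrypts only small payloads directly (at most 446 bytes with these parameters), so in practice it would typically protect a symmetric key rather than a full request body.

```typescript
import {
  constants,
  generateKeyPairSync,
  KeyObject,
  privateDecrypt,
  publicEncrypt,
} from "node:crypto";

// Generates a 4096-bit RSA key pair that lives only in process memory.
// The private key stays a KeyObject and is never serialized or written to disk.
function createEphemeralKeyPair(): { publicKeyPem: string; privateKey: KeyObject } {
  const { publicKey, privateKey } = generateKeyPairSync("rsa", {
    modulusLength: 4096,
  });
  return {
    // Only the public half is exported, so it can be handed to clients.
    publicKeyPem: publicKey.export({ type: "spki", format: "pem" }).toString(),
    privateKey,
  };
}

// Client side: encrypt a small payload with the published public key.
// Assumption: RSA-OAEP with SHA-256.
function encryptForProxy(publicKeyPem: string, payload: Buffer): Buffer {
  return publicEncrypt(
    { key: publicKeyPem, padding: constants.RSA_PKCS1_OAEP_PADDING, oaepHash: "sha256" },
    payload,
  );
}

// Proxy side (inside the TEE): decrypt with the in-memory private key.
function decryptInTee(privateKey: KeyObject, ciphertext: Buffer): Buffer {
  return privateDecrypt(
    { key: privateKey, padding: constants.RSA_PKCS1_OAEP_PADDING, oaepHash: "sha256" },
    ciphertext,
  );
}
```

Because the key pair is regenerated whenever the process starts, anything encrypted to an ephemeral public key becomes unreadable once that process exits.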

For enhanced security with permanent keys, Polaris LLM integrates with an external key management solution that securely stores a 4096-bit RSA private key inside a Hardware Security Module (HSM). The private key is accessed only through an attestation policy, which ensures it is used exclusively inside a TEE. The key management service and attestation policy rely on services provided by the cloud provider. For more information, see the Cloud Providers documentation.

A persistent decryption key makes it possible to encrypt and decrypt data that is stored permanently in a bucket, on a disk, in a database, or on any other storage medium. To implement encryption and decryption from the workload container, please refer to the Polaris SDK documentation.
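One common way to use a long-lived RSA key for stored data is envelope encryption: each object is encrypted with a fresh symmetric key, and only that small key is wrapped with the RSA public key. The sketch below illustrates the idea with Node's `crypto` module. It is not the Polaris SDK API: the `Envelope` type and both function names are hypothetical, AES-256-GCM and RSA-OAEP with SHA-256 are assumed choices, and the local `privateKey` argument merely stands in for the HSM-held key, which in a real deployment would be reached through the cloud provider's key management service.

```typescript
import {
  constants,
  createCipheriv,
  createDecipheriv,
  KeyObject,
  privateDecrypt,
  publicEncrypt,
  randomBytes,
} from "node:crypto";

// Hypothetical container for everything that must be stored with the data.
interface Envelope {
  wrappedKey: Buffer; // AES data key, encrypted with the RSA public key
  iv: Buffer;         // 96-bit GCM nonce
  tag: Buffer;        // GCM authentication tag
  ciphertext: Buffer;
}

// Assumed padding parameters for wrapping the data key.
const OAEP_SHA256 = { padding: constants.RSA_PKCS1_OAEP_PADDING, oaepHash: "sha256" };

// Encrypts data for storage using a fresh AES-256-GCM key per object.
function sealForStorage(plaintext: Buffer, publicKey: KeyObject): Envelope {
  const dataKey = randomBytes(32);
  const iv = randomBytes(12);
  const cipher = createCipheriv("aes-256-gcm", dataKey, iv);
  const ciphertext = Buffer.concat([cipher.update(plaintext), cipher.final()]);
  return {
    wrappedKey: publicEncrypt({ key: publicKey, ...OAEP_SHA256 }, dataKey),
    iv,
    tag: cipher.getAuthTag(),
    ciphertext,
  };
}

// Decrypts stored data. Throws if the ciphertext or tag was tampered with.
function openFromStorage(envelope: Envelope, privateKey: KeyObject): Buffer {
  const dataKey = privateDecrypt({ key: privateKey, ...OAEP_SHA256 }, envelope.wrappedKey);
  const decipher = createDecipheriv("aes-256-gcm", dataKey, envelope.iv);
  decipher.setAuthTag(envelope.tag);
  return Buffer.concat([decipher.update(envelope.ciphertext), decipher.final()]);
}
```

With this layout, encryption needs only the public key and can happen anywhere, while decryption requires access to the private key and is therefore tied to the attested TEE.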